Salt: Combining ACID and BASE in a Distributed Database

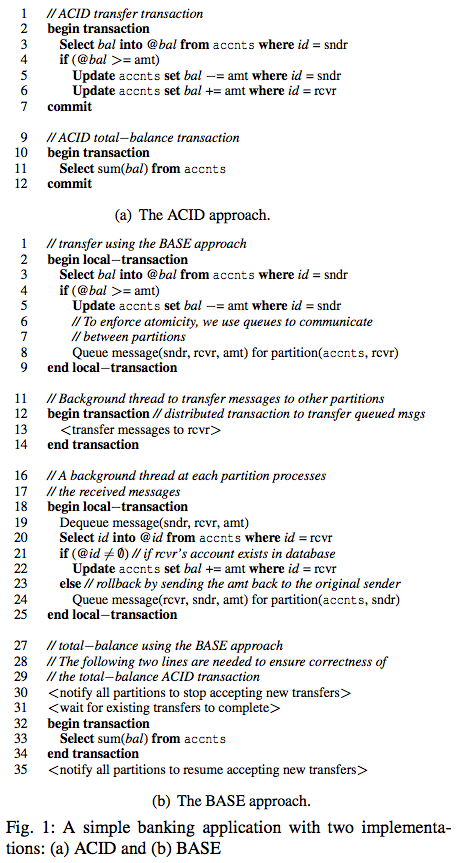

This paper appeared in OSDI'14. The authors are Chao Xie, Chunzhi Su, Manos Kapritsos, Yang Wang, Navid Yaghmazadeh, Lorenzo Alvisi, and Prince Mahajan, all from The University of Texas at Austin. Here you can watch a video of the OSDI presentation of the paper, and also find the presentation slides and the paper available as open access. USENIX knows how to do conferences right.

Dropping ACID

The ACID (Atomicity, Consistency, Isolation, Durability) approach provides ease of programming through its simple transaction abstraction, but pays for it in performance. The BASE (Basically Available, Soft state, Eventually consistent) approach, popularized by NoSQL systems, provides good performance (low latency, high throughput, and scalability), but gives up ease of programming because concurrent execution in a distributed system is much harder to reason about. This paper introduces Salt, which aims to find a best-of-both-worlds middle ground: to provide high performance like BASE with mode